#conversational #interface #convercon

19 Sept. 2017


Several chatbot platforms are available on the market. In this blog article, we will focus on two of them: Api.ai and Amazon Lex.

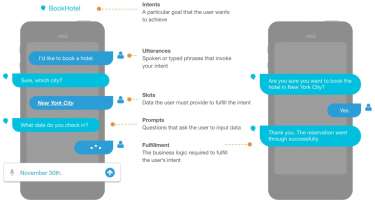

Amazon Lex takes user utterances as input and helps turn them into valid requests. These can then be sent to an AWS Lambda function, which finally fulfills the user’s intent. To do this, Lex uses the same technology that powers Amazon Alexa. The whole process consists of the following steps.

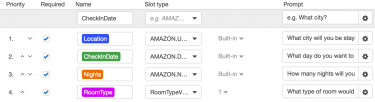
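To make the flow more concrete, here is a toy Python model of the slot-filling idea: the bot asks for each missing slot in turn until it can assemble a complete request. It only mimics the behaviour described above; the intent name `BookHostel`, the slot names and the prompts are hypothetical, and none of this is the Lex API.

```python
# Toy model of Lex-style slot filling; NOT the Lex API.
# Intent name, slot names and prompts are hypothetical examples.
from typing import Dict, List, Optional, Tuple

INTENT_NAME = "BookHostel"
# Required slots in the order the bot asks for them, each with its prompt.
SLOTS: List[Tuple[str, str]] = [
    ("City", "In which city do you want to stay?"),
    ("CheckInDate", "When do you want to check in?"),
    ("Nights", "For how many nights do you want to stay?"),
]


def next_missing_slot(slots: Dict[str, str]) -> Optional[Tuple[str, str]]:
    """Return (slot name, prompt) for the first unfilled slot, or None."""
    for name, prompt in SLOTS:
        if not slots.get(name):
            return name, prompt
    return None


def fill_slots(answers: List[str], initial: Optional[Dict[str, str]] = None) -> dict:
    """Ask for each missing slot in turn and return the completed request."""
    slots = dict(initial or {})  # values already extracted from the first utterance
    replies = iter(answers)      # simulated user answers, one per prompt
    while True:
        missing = next_missing_slot(slots)
        if missing is None:
            break
        name, prompt = missing
        print(f"Bot: {prompt}")
        slots[name] = next(replies)
    return {"intent": INTENT_NAME, "slots": slots}


print(fill_slots(["2017-10-01", "3"], initial={"City": "Berlin"}))
```

Because the city was already part of the first utterance, the bot only asks for the check-in date and the number of nights.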

Optionally, a custom Lambda function can be configured to validate the user’s input.
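A validation function could look roughly like the following sketch. It assumes the Lex (V1) Lambda event format as we understand it (`currentIntent.name`, `currentIntent.slots`) and the `ElicitSlot` and `Delegate` dialog actions; the `BookHostel` intent, the `Nights` slot and the limit of 14 nights are hypothetical.

```python
# Sketch of a Lex (V1) validation code hook.
# Intent "BookHostel", slot "Nights" and MAX_NIGHTS are hypothetical.

MAX_NIGHTS = 14  # hypothetical business rule


def elicit_slot(intent_name, slots, slot_to_elicit, text):
    """Ask the user again for one specific slot."""
    return {"dialogAction": {
        "type": "ElicitSlot",
        "intentName": intent_name,
        "slots": slots,
        "slotToElicit": slot_to_elicit,
        "message": {"contentType": "PlainText", "content": text},
    }}


def delegate(slots):
    """Let Lex decide the next step, e.g. prompting for the next empty slot."""
    return {"dialogAction": {"type": "Delegate", "slots": slots}}


def lambda_handler(event, context):
    intent = event["currentIntent"]
    slots = dict(intent["slots"])  # copy so the incoming event stays untouched
    nights = slots.get("Nights")   # slot values arrive as strings or None
    if nights is not None and not (nights.isdigit() and 1 <= int(nights) <= MAX_NIGHTS):
        slots["Nights"] = None     # discard the invalid value before asking again
        return elicit_slot(intent["name"], slots, "Nights",
                           f"Please enter a number of nights between 1 and {MAX_NIGHTS}.")
    return delegate(slots)
```

Returning `Delegate` for valid or still-empty slots keeps the prompting logic in the bot configuration instead of duplicating it in code.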

Confirmation: When all necessary data has been collected, a final confirmation question can be included. If the user declines, the whole booking process is aborted.
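Handling the confirmation step in a code hook might be sketched like this. It assumes the Lex (V1) `confirmationStatus` values `None`, `Confirmed` and `Denied` and the `ConfirmIntent`, `Close` and `Delegate` dialog actions as we understand them; intent and slot names are hypothetical, and the function assumes all slots are already filled.

```python
# Sketch: react to the confirmation answer in a Lex (V1) code hook.
# Assumes all slots are filled; intent and slot names are hypothetical.


def plain(text):
    """Wrap text in a plain-text message object."""
    return {"contentType": "PlainText", "content": text}


def handle_confirmation(event):
    intent = event["currentIntent"]
    slots = intent["slots"]
    status = intent["confirmationStatus"]  # "None", "Confirmed" or "Denied"
    if status == "Denied":
        # The user said no: abort the booking.
        return {"dialogAction": {"type": "Close", "fulfillmentState": "Failed",
                                 "message": plain("Okay, I have cancelled the booking.")}}
    if status == "None":
        # Not asked yet: ask the final confirmation question.
        question = f"Shall I book {slots['Nights']} nights in {slots['City']}?"
        return {"dialogAction": {"type": "ConfirmIntent", "intentName": intent["name"],
                                 "slots": slots, "message": plain(question)}}
    # Confirmed: let Lex continue with fulfillment.
    return {"dialogAction": {"type": "Delegate", "slots": slots}}
```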

Fulfillment: In the last step, the system either simply returns the collected parameters to the client (for testing purposes) or hands them over to an AWS Lambda function, which fulfills the final request.
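A fulfillment Lambda function could then be as small as the sketch below. The `Close` response with a `fulfillmentState` follows the Lex (V1) response format as we understand it; `book_room` is a hypothetical stand-in for a real booking backend, and the slot names are hypothetical as well.

```python
# Sketch of a Lex (V1) fulfillment Lambda function.
# book_room() and the slot names are hypothetical.


def book_room(city, nights):
    """Hypothetical stand-in for a real booking backend; returns a reference."""
    return f"HOSTEL-{city[:3].upper()}-{nights}"


def lambda_handler(event, context):
    slots = event["currentIntent"]["slots"]
    reference = book_room(slots["City"], int(slots["Nights"]))
    # "Close" ends the conversation and reports the outcome to Lex.
    return {"dialogAction": {
        "type": "Close",
        "fulfillmentState": "Fulfilled",
        "message": {"contentType": "PlainText",
                    "content": f"Done! Your booking reference is {reference}."},
    }}
```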

There is also an option to include prompts for error handling. In this case, the bot can, for example, ask for clarification (you can configure how many times this may happen in a row) or refer the user to customer service.
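In Lex, this retry behaviour is configured in the console rather than in code, but the logic can be illustrated with a toy model: ask for clarification up to a fixed number of times in a row, then hand over to customer service. The class name, the default of two retries and the messages are hypothetical.

```python
# Toy model of the clarification/retry logic; NOT the Lex API.
# Messages and the retry limit are hypothetical.

CLARIFICATION = "Sorry, can you please repeat that?"
HANG_UP = "Sorry, I could not understand you. Please contact our customer service."


class FallbackPolicy:
    def __init__(self, max_retries: int = 2):
        self.max_retries = max_retries
        self.misses = 0  # consecutive turns the bot did not understand

    def not_understood(self) -> str:
        """Return the bot's reply after an utterance it could not handle."""
        self.misses += 1
        if self.misses <= self.max_retries:
            return CLARIFICATION
        return HANG_UP

    def understood(self) -> None:
        """A successful turn resets the counter: only misses in a row count."""
        self.misses = 0
```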

The basic principles of Api.ai are very similar to those of Amazon Lex. You set up your agent (bot), create intents, configure sample utterances (“User says”) and define parameters, actions and responses. These steps mirror the structure of Lex described above. What’s interesting is that Api.ai doesn’t stop there: it offers considerably more options for building your chatbot. Moreover, this additional functionality comes without any loss of usability; the system is still intuitive and easy to use. In the following, I will go over the same points as above, mentioning only the major differences in the Api.ai implementation.

Building the intent: Intents do not have to be triggered explicitly by something the user says; they can also be triggered by events. For example, a user opens the chat application and the bot’s channel and receives a welcome message without having texted the bot first. To avoid any misunderstanding: this can also be achieved with Amazon Lex. The difference is that Api.ai offers this functionality as part of its visual UI. There is also a training section (currently in beta) that lets you improve intent classification by adding more examples to the “User says” section. The more variations of a question you provide, the better Api.ai can understand the user.
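Events can also be triggered from your own client. The sketch below builds (but does not send) such a request against the Api.ai v1 `/query` endpoint. The URL, the `event` object with `name` and `data`, and the `Bearer` token header reflect our understanding of the v1 API, which may no longer be available; the token and session ID are placeholders, and `WELCOME` assumes an intent configured to listen for that event.

```python
# Sketch: trigger an event-based intent via the Api.ai v1 query endpoint.
# Endpoint and payload shape are assumptions based on the v1 API;
# the token and session ID are placeholders.
import json
import urllib.request

API_URL = "https://api.api.ai/v1/query?v=20150910"
CLIENT_ACCESS_TOKEN = "YOUR_CLIENT_ACCESS_TOKEN"  # placeholder


def build_event_query(event_name, session_id, lang="en", data=None):
    """Build a POST request that fires `event_name` instead of a text query."""
    body = {
        "event": {"name": event_name, "data": data or {}},
        "lang": lang,
        "sessionId": session_id,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {CLIENT_ACCESS_TOKEN}",
            "Content-Type": "application/json; charset=utf-8",
        },
        method="POST",
    )


req = build_event_query("WELCOME", session_id="user-42")
# urllib.request.urlopen(req) would send it and return the agent's response.
```

The user never typed anything here: opening the channel is enough for the client to fire the event and receive the welcome message.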

Slots: The basic principle is almost the same, with one exception: the responses. Instead of just defining a prompt that asks for the requested data, Api.ai makes it much easier to build a genuinely pleasant conversation. You can enter multiple responses, each containing different parameters, and the question that gets asked depends on which data the user has already provided. For example, when asking how many nights the user wants to stay in the hostel, you can enter “For how many nights do you want to stay?” as a fallback, but also include questions that reuse data the user provided earlier. Of all the questions whose parameters are actually available, the one containing the most parameters is chosen and sent to the user. Parameters are saved in the context and can be used by other intents as well. Responses can also be tailored to other platforms such as Actions on Google, Facebook Messenger, Telegram, Kik, Viber or Skype.
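The selection rule described above can be sketched in a few lines of Python. Api.ai references parameters in responses with a `$name` placeholder; the function below treats a question as usable only if all of its parameters are known and then picks the one with the most parameters. This models the behaviour as described here, not Api.ai’s actual implementation; prompts and parameter names are hypothetical.

```python
# Sketch of the "most parameters wins" prompt selection described above.
# Not Api.ai's implementation; prompts and parameter names are hypothetical.
import re

PLACEHOLDER = re.compile(r"\$(\w+)")  # matches $city, $date, ...


def pick_prompt(prompts, known):
    """Return the usable prompt with the most parameters, filled in.

    A prompt is usable only if every $parameter it references is known.
    Ties go to the prompt listed first.
    """
    usable = [p for p in prompts
              if all(name in known for name in PLACEHOLDER.findall(p))]
    best = max(usable, key=lambda p: len(set(PLACEHOLDER.findall(p))))
    return PLACEHOLDER.sub(lambda m: str(known[m.group(1)]), best)


PROMPTS = [
    "For how many nights do you want to stay?",  # fallback, no parameters
    "For how many nights do you want to stay in $city?",
    "For how many nights do you want to stay in $city from $date?",
]

print(pick_prompt(PROMPTS, {"city": "Berlin"}))
```

Keeping a parameter-free fallback in the list guarantees that at least one prompt is always usable.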

Fulfillment: The main difference is that you are not limited to AWS: you can enter any webhook URL. This can be a web service you built yourself or a third-party API; the only condition is that it meets Api.ai’s webhook requirements.
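A self-built webhook could be as small as the following sketch, using only Python’s standard library. It assumes the Api.ai v1 webhook format as we understand it: the request carries `result.action` and `result.parameters`, and the response returns `speech`, `displayText` and `source`. The action name `book.hostel`, the parameters and the source label are hypothetical.

```python
# Sketch of a minimal Api.ai (v1-style) fulfillment webhook.
# Field names follow the v1 format as we understand it; the action name,
# parameters and source label are hypothetical.
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def handle_webhook(payload):
    """Turn the agent's request into a v1-style webhook response."""
    result = payload.get("result", {})
    params = result.get("parameters", {})
    if result.get("action") == "book.hostel":  # hypothetical action name
        text = f"Booked {params.get('nights')} nights in {params.get('city')}."
    else:
        text = "Sorry, I can't handle that yet."
    return {"speech": text, "displayText": text, "source": "hostel-webhook"}


class WebhookHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        payload = json.loads(self.rfile.read(length) or b"{}")
        body = json.dumps(handle_webhook(payload)).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


# To serve it locally: HTTPServer(("", 8080), WebhookHandler).serve_forever()
```

Keeping the logic in `handle_webhook` separate from the HTTP handler makes it easy to test without a running server.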

If you have already set up your workflow with Amazon services or developed a skill for Alexa, it is probably easier to get familiar with Lex. It offers just a few steps to structure the user’s input in such a way that your Lambda function can deal with it. To summarize: with Lex, it’s all about building a valid request out of a user query.

Api.ai offers more options for building your chatbot (or voice interface) and manages to pack them into a user interface that looks good, is easy to use and is very intuitive. Additionally, the documentation is very good, although in this regard Amazon is not exactly a role model anyway.